
Serverless

Serverless is a confusingly named technology: it uses servers, but they belong to someone else. “Someone else’s server” is a better name, though admittedly far less catchy. It is also known as Functions as a Service (FaaS), which is more accurate. Serverless simply means that code is sent to someone else’s server, which executes it and returns the results. Popular serverless technologies include AWS (Amazon Web Services) Lambda, Azure Functions, and Google Cloud Functions.

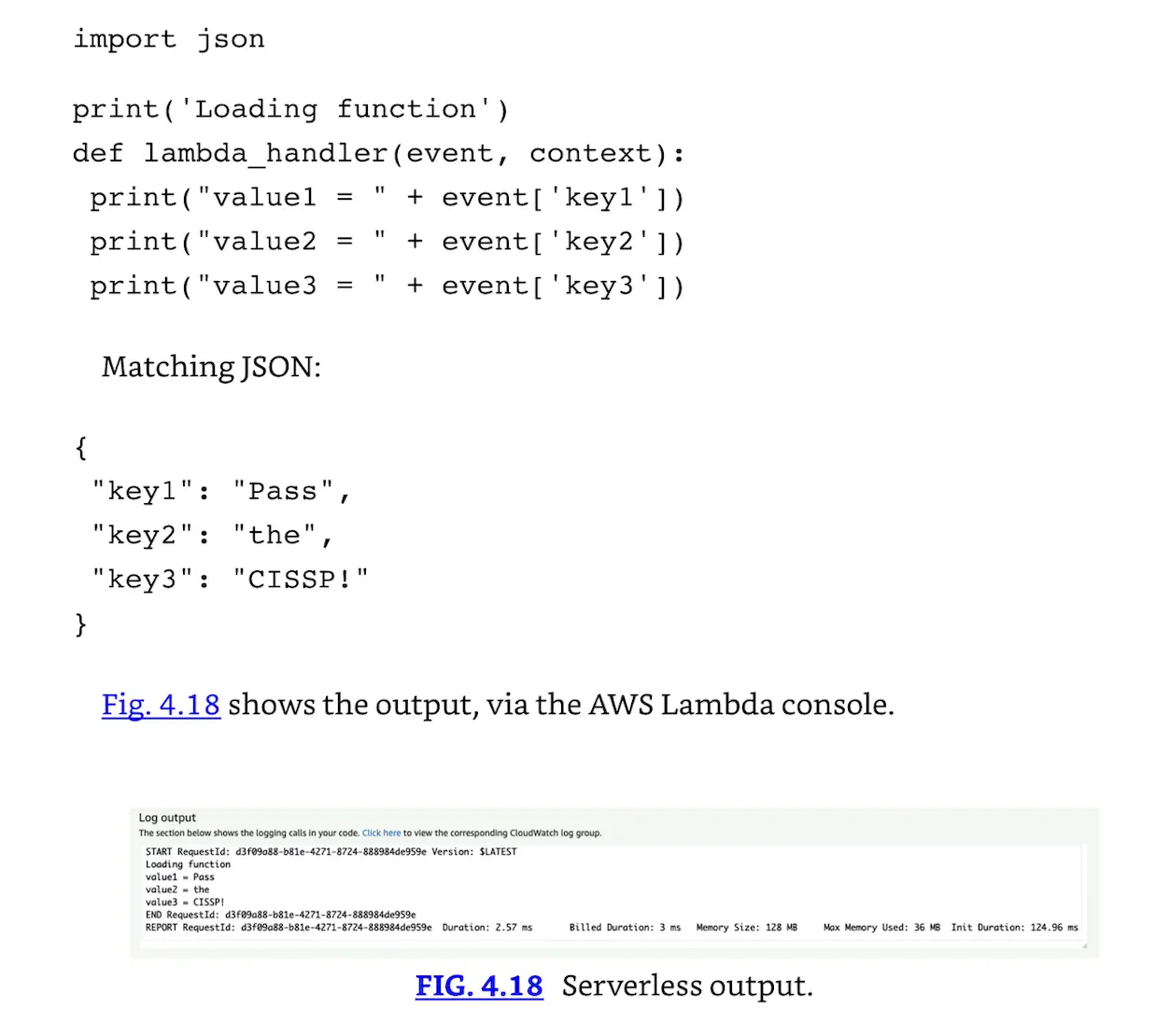

Here’s a simple “Hello World!”-style function [21], using JSON (JavaScript Object Notation). Note that JSON structure is not testable on the exam; it is used here to provide a simple example of serverless code.
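For readers who want the idea in text form, here is a minimal sketch of a comparable function. It assumes AWS Lambda’s Python runtime. The handler name `lambda_handler`, its `(event, context)` parameters, and the message are illustrative choices and are not taken from the figure. The function builds a response whose body is a JSON string, in the same spirit as the JSON example above.

```python
import json


def lambda_handler(event, context):
    """Entry point the serverless platform would invoke.

    `event` carries the request data and `context` carries runtime metadata.
    Neither is needed for a Hello World response.
    """
    # Return a response whose body is serialized as JSON.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": "Hello World!"}),
    }


if __name__ == "__main__":
    # Local simulation only: call the handler directly, as the provider's
    # infrastructure would when the function is triggered.
    print(lambda_handler({}, None))
```

When the code runs on AWS, your own machine never executes the handler. The provider’s servers run it and send back the returned structure.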

Serverless output.


We have run functions like this for decades on our own CPUs. In this case, the function was sent to AWS, and AWS’s CPUs executed it and returned the results. AWS charges only for what the function consumes while it runs: a small per-request fee plus the execution time, scaled by the memory allocated to the function. There is no charge for an always-on server. This is much more efficient than running that code on a physical host or a virtual machine (where hundreds of other processes are running and consuming CPU cycles), or in a container (which uses fewer CPU cycles than a physical host or VM, but more than serverless).
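The following rough cost model makes the pay-per-use point concrete. It assumes Lambda-style pricing: a per-request fee plus a charge per GB-second, which is execution time multiplied by allocated memory. It compares that pricing with a virtual machine billed for every hour it exists. All prices are hypothetical placeholders, not real rates, and the model ignores free tiers and other billing details.

```python
# Hypothetical placeholder prices for illustration only (not real rates).
PRICE_PER_GB_SECOND = 0.00001
PRICE_PER_MILLION_REQUESTS = 0.20
VM_PRICE_PER_HOUR = 0.05
HOURS_PER_MONTH = 730


def serverless_monthly_cost(invocations, duration_ms, memory_mb):
    """Pay-per-use: cost grows only with the work actually executed."""
    # GB-seconds = number of runs x seconds per run x gigabytes allocated.
    gb_seconds = invocations * (duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * PRICE_PER_GB_SECOND
    request_cost = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return compute_cost + request_cost


def vm_monthly_cost(hours=HOURS_PER_MONTH):
    """Always-on: billed for every hour, whether busy or idle."""
    return hours * VM_PRICE_PER_HOUR


if __name__ == "__main__":
    for calls in (10_000, 1_000_000, 100_000_000):
        s = serverless_monthly_cost(calls, duration_ms=100, memory_mb=128)
        print(f"{calls:>11,} calls: serverless ${s:,.2f} vs VM ${vm_monthly_cost():,.2f}")
```

With these placeholder rates, the serverless cost tracks the number of calls, while the VM costs the same whether it is busy or idle. At very high, steady volumes the comparison can narrow or flip.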

High-Performance Computing (HPC) and Grid Computing

Both high-performance computing (HPC) and grid computing seek to achieve high computational performance via large numbers of computers. The key distinction: high-performance computing systems (also known as supercomputers) leverage parallel computers at a single location (all typically working on the same task), while grid computing uses large numbers of distributed computers (working on a variety of tasks).

HPC systems leverage massive numbers of CPUs to achieve quadrillions of floating-point operations per second (FLOPS). Common HPC applications include DNA sequencing, autonomous driving, weather prediction, and much more. The primary purpose of HPC systems is to allow for increased performance through economies of scale. One of the key security concerns with HPC systems is ensuring that data integrity is maintained throughout processing. HPC systems often use some degree of shared memory on which they operate. If this shared memory is not appropriately managed, it can expose race conditions. In a race condition, the outcome depends on the timing of concurrent accesses, which introduces integrity challenges.
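A shared-memory race condition can be sketched in Python, with threads standing in for HPC workers. This is a simplifying assumption: production HPC code is not usually written this way. The unsafe version performs an unsynchronized read-modify-write, so two workers can read the same old value and one update is lost. The locked version makes that update atomic with respect to the other workers, which preserves integrity. The class and function names are hypothetical.

```python
import threading
import time


class SharedCounter:
    """Stands in for a value in shared memory that many workers update."""

    def __init__(self):
        self.value = 0
        self.lock = threading.Lock()

    def unsafe_increment(self):
        # Read-modify-write with no synchronization: another thread may run
        # between the read and the write, causing a lost update.
        current = self.value
        time.sleep(0)  # yield the CPU, making an interleaving more likely
        self.value = current + 1

    def safe_increment(self):
        # Holding the lock prevents other threads from interleaving.
        with self.lock:
            current = self.value
            time.sleep(0)
            self.value = current + 1


def run(use_lock, workers=8, per_worker=200):
    """Have several threads increment one shared counter; return the total."""
    counter = SharedCounter()
    step = counter.safe_increment if use_lock else counter.unsafe_increment

    def work():
        for _ in range(per_worker):
            step()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print("with lock:   ", run(use_lock=True))   # always workers * per_worker
    print("without lock:", run(use_lock=False))  # often less: updates were lost
```

The unlocked total varies from run to run because it depends on thread scheduling. That timing dependence is what makes race conditions hard to detect in testing.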

Grid computing typically harvests the spare CPU cycles that devices do not currently need for their own work, and focuses them on the goal of the grid. The few spare cycles from each individual computer might not mean much to the overall task, but in aggregate they are significant. SETI@home was a famous example of grid computing: it was “a scientific experiment, based at UC Berkeley, that uses Internet-connected computers in the Search for Extraterrestrial Intelligence (SETI)” [22].
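The work-unit pattern behind grid computing can be sketched as follows. A coordinator splits one large job into independent work units, many machines each process a few units with their spare cycles, and the coordinator combines the partial results. In this simplified simulation, the job (a sum of squares) and all names are hypothetical, and threads stand in for volunteer computers. Networking, idle-time detection, and result verification are not modeled.

```python
from concurrent.futures import ThreadPoolExecutor


def make_work_units(start, stop, unit_size):
    """Split one large job into independent (lo, hi) work units."""
    return [(lo, min(lo + unit_size, stop)) for lo in range(start, stop, unit_size)]


def process_unit(unit):
    """What a single volunteer machine computes for one work unit."""
    lo, hi = unit
    return sum(i * i for i in range(lo, hi))


def run_grid(start, stop, unit_size, volunteers):
    """Distribute the work units and aggregate the partial results."""
    units = make_work_units(start, stop, unit_size)
    # Each pool thread plays the role of one independent computer.
    with ThreadPoolExecutor(max_workers=volunteers) as pool:
        partials = list(pool.map(process_unit, units))
    # No single unit matters much on its own; in aggregate they finish the job.
    return sum(partials)


if __name__ == "__main__":
    print(run_grid(0, 100_000, unit_size=1_000, volunteers=4))
```

Because each unit is independent, the units can be processed in any order, on any machine, at any time. This is what lets a grid use computers that are only intermittently idle.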